🎯 TL;DR - Key Takeaways

- ✅ August 2, 2026 is 6 months away: the compliance countdown is critical

- ✅ Penalties up to €35M or 7% global revenue already active since August 2025

- ✅ February 2025 prohibitions now enforced - decommission banned systems

- ✅ Post-Market Monitoring plan template due from the Commission by February 2, 2026

- ✅ Quality Management Systems (QMS) mandatory for high-risk AI

- ✅ Even if deadlines extend, AI governance = enterprise trust = market access

📑 What This Guide Covers:

- The 2026 Deadline: Why the Countdown Started Yesterday

- Risk Classification: What Systems Are Regulated?

- Regional Enforcement: France, Germany, Italy, Netherlands

- Sector-Specific Compliance Deep Dives

- Technical Documentation & Transparency

- Governance: The AI Office and Scientific Panel

- The Cost of Non-Compliance: Penalties & Fines

- Your Action Plan: Moving Toward Trustworthy AI

- 5 Critical Mistakes Enterprises Make

1️⃣ The 2026 Deadline: Why the Countdown Started Yesterday

#EUAIAct #ComplianceDeadline #EnterpriseAI

The regulatory landscape for AI in the European Union has fundamentally shifted. The EU AI Act entered into force on August 1, 2024, and enterprises are now in a critical 6-month sprint to the August 2, 2026 general applicability deadline.

⚠️ Critical Context:

We're in February 2026. The compliance countdown isn't theoretical—it's happening now.

The Tiered Transition Timeline

The EU AI Act does not apply all at once. It uses staggered implementation, meaning specific chapters became enforceable long before the 2026 general deadline.

| Date | Chapters/Articles | Scope | Status |
|---|---|---|---|
| Feb 2, 2025 | Chapters I & II (Art. 1–5) | General provisions and prohibitions | ✅ ACTIVE NOW |
| Aug 2, 2025 | Chapter III (Sec. 4), Chapters V & VII; Art. 99–100 | Notified bodies, GPAI rules, governance, penalties | ✅ ACTIVE NOW |
| Feb 2, 2026 | Secondary legislation (Art. 72(3)) | Post-Market Monitoring plan template deadline | ⚠️ JUST PASSED |
| Aug 2, 2026 | General applicability | PRIMARY DEADLINE: most AI Act provisions | ⚠️ 6 MONTHS AWAY |
| Aug 2, 2027 | Art. 6(1), Annex I | High-risk systems under existing Union harmonization laws | 18 months away |
| Aug 2, 2030 | Article 111 | High-risk systems used by public authorities (large-scale IT systems under Annex X: Dec 31, 2030) | Extended timeline |
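
A staggered timeline like this is easy to track in code. The following is a minimal sketch, assuming the application dates set out in Articles 111 and 113 of the Act; `Milestone`, `MILESTONES`, and `milestone_status` are illustrative names, not part of any official tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Milestone:
    applies_from: date
    scope: str


# Application dates per Articles 111 and 113 of Regulation (EU) 2024/1689.
MILESTONES = [
    Milestone(date(2025, 2, 2), "General provisions and prohibitions (Chapters I-II)"),
    Milestone(date(2025, 8, 2), "Notified bodies, GPAI rules, governance, penalties"),
    Milestone(date(2026, 8, 2), "General applicability"),
    Milestone(date(2027, 8, 2), "High-risk systems under Art. 6(1) / Annex I"),
    Milestone(date(2030, 8, 2), "High-risk systems used by public authorities"),
]


def milestone_status(milestone: Milestone, today: date) -> str:
    """Return 'ACTIVE' once the milestone applies, else the days remaining."""
    days_left = (milestone.applies_from - today).days
    if days_left <= 0:
        return "ACTIVE"
    return f"{days_left} days away"


if __name__ == "__main__":
    today = date.today()
    for m in MILESTONES:
        print(f"{m.applies_from.isoformat()}  {milestone_status(m, today):>14}  {m.scope}")
```

Running the script prints one line per milestone, so it can be dropped into a compliance dashboard or a scheduled report.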

⏰ Critical Insight:

Enterprises must begin auditing systems immediately—particularly those that may fall under prohibited categories requiring decommissioning by February 2025 (already passed) or those requiring QMS implementation by August 2026 (6 months away).

💡 Beyond Regulatory Compliance:

Even if the August 2026 deadline is extended (highly unlikely), AI governance, transparency, and accountability are now permanent enterprise requirements. Your clients, partners, insurers, and regulators demand it today, regardless of statutory deadlines.

2️⃣ Risk Classification: What Systems Are Regulated?

#RiskAssessment #HighRiskAI #ProhibitedPractices

The AI Act adopts a risk-based approach, subjecting systems to requirements proportional to their potential harm.

🔴 Unacceptable Risk (Prohibited Practices)

Since February 2, 2025, the practices listed under Article 5 have been prohibited, and any such systems must already be decommissioned:

- ❌ Subliminal Techniques: AI manipulating behavior beyond conscious awareness

- ❌ Exploitative Systems: Targeting vulnerabilities (age, disability)

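- ❌ Social Scoring: Evaluating or classifying people based on social behavior or personal characteristics in ways that lead to detrimental treatment (applies to public and private actors alike)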

- ❌ "Real-time" Biometric Identification: Remote identification in public spaces for law enforcement (narrow exceptions apply)

Penalty: Up to €35 million OR 7% of global annual turnover, whichever is higher

🟠 High-Risk AI Systems (Annex III)

Systems used in critical sectors must comply with rigorous obligations:

Sectors:

- 💼 Employment & HR: Recruitment, performance evaluation, promotion decisions

- 💰 Credit Scoring: Creditworthiness assessment, loan decisions

- 🏥 Healthcare: Medical diagnosis, treatment recommendations

- 🎓 Education: Student evaluation, admission decisions

- 🏗️ Critical Infrastructure: Energy, transport, water supply

- ⚖️ Law Enforcement: Predictive policing, risk assessment

- 🛂 Migration & Border Control: Visa decisions, asylum applications

Requirements:

- ✅ Quality Management System (QMS)

- ✅ Technical documentation (Annex IV)

- ✅ Post-Market Monitoring (PMM)

- ✅ Human oversight mechanisms

- ✅ Transparency and explainability

- ✅ Risk management procedures

Penalty: Up to €15M OR 3% of global turnover

🟡 General Purpose AI (GPAI) & Legacy Systems

GPAI models (e.g., LLMs like GPT, Claude, Gemini) face specific transparency and technical documentation rules that have applied since August 2, 2025.

Legacy Provisions:

- • GPAI models placed on the market before August 2, 2025 have until August 2, 2027 to comply

- • AI systems that are components of large-scale IT systems (Annex X) and were placed on the market before August 2, 2027 have until December 31, 2030
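
The tiered approach described in this section lends itself to a first-pass triage of an AI inventory. The sketch below is only an illustration: the tag names are hypothetical labels an internal inventory might use, not terms defined by the Act, and a real classification needs legal review of Article 5, Article 6, and Annex III.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"


# Hypothetical inventory tags, loosely mirroring the lists in this section.
PROHIBITED_TAGS = {"subliminal_manipulation", "exploits_vulnerability",
                   "social_scoring", "realtime_remote_biometric_id"}
HIGH_RISK_TAGS = {"employment", "credit_scoring", "healthcare", "education",
                  "critical_infrastructure", "law_enforcement", "migration"}
TRANSPARENCY_TAGS = {"chatbot", "synthetic_media"}


def classify(tags: set[str]) -> RiskTier:
    """Return the strictest tier triggered by any tag (triage only)."""
    if tags & PROHIBITED_TAGS:
        return RiskTier.PROHIBITED
    if tags & HIGH_RISK_TAGS:
        return RiskTier.HIGH
    if tags & TRANSPARENCY_TAGS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    inventory = {
        "cv_screener": {"employment", "chatbot"},
        "support_bot": {"chatbot"},
        "spam_filter": set(),
    }
    for name, tags in inventory.items():
        print(f"{name}: {classify(tags).value}")
```

Checking the strictest tier first means a system that is both a chatbot and a recruitment tool is treated as high-risk rather than limited-risk.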

3️⃣ Regional Enforcement: France, Germany, Italy, Netherlands

#NationalEnforcement #LocalRegulation #EUMemberStates

While the AI Act is EU-wide, local supervisory authorities and enforcement priorities vary significantly.

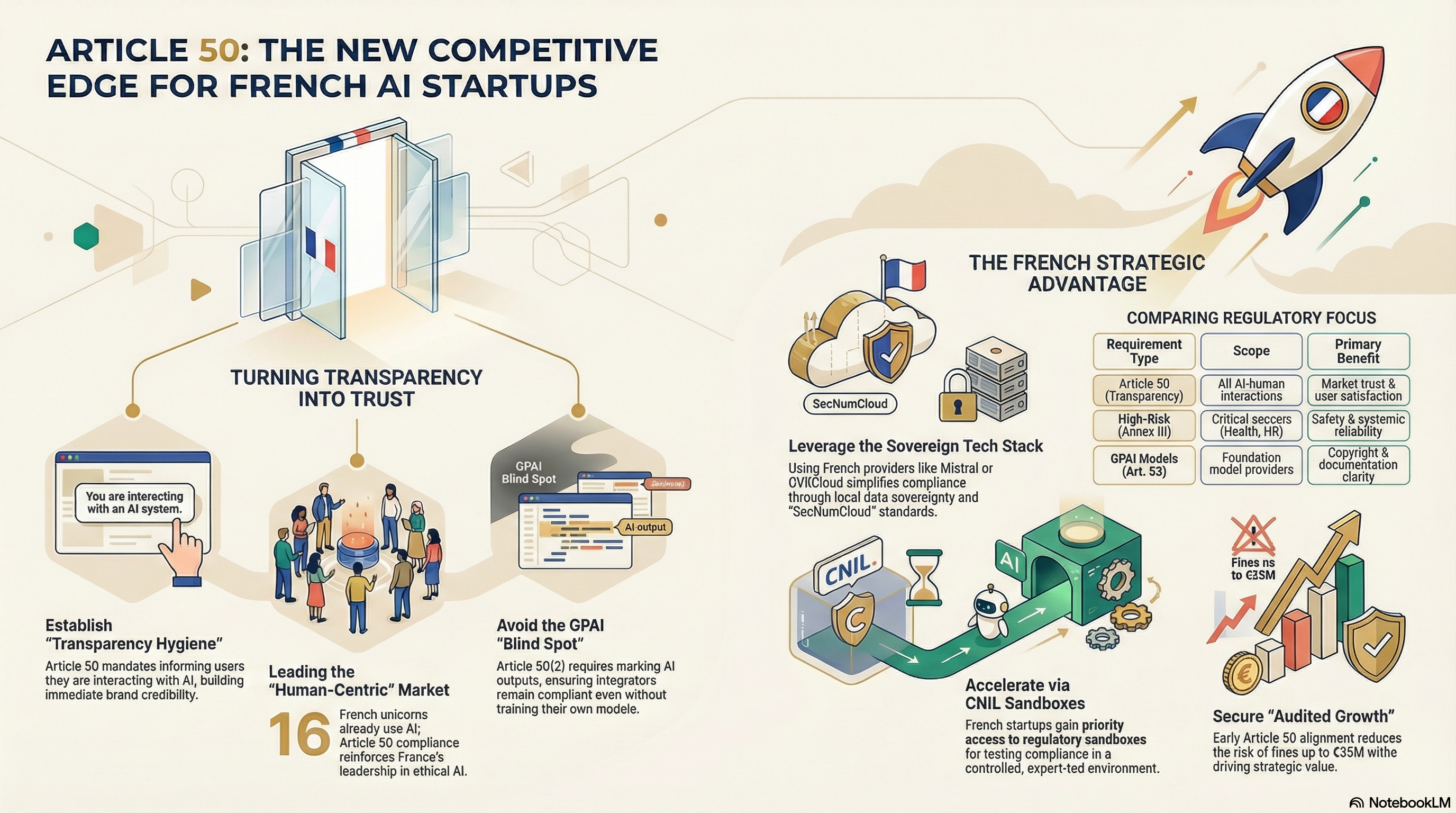

🇫🇷 France

Supervisory Authorities:

- • CNIL (Data Protection & Privacy)

- • INESIA (National Institute for Evaluation and Safety of AI) - established 2025

Enforcement Focus:

- • Digital sovereignty policy

- • €2.5 billion AI industry investment

- • Safety and national security

Unique Guidance:

- • CNIL guides for "AI-augmented cameras"

- • Recommendations for generative AI deployment

- • Emphasis on European-hosted infrastructure (SecNumCloud)

Strategic Advantage: Paris-based firms have direct access to INESIA for localized compliance guidance

🇩🇪 Germany

Supervisory Authorities:

- • Bundesnetzagentur (Federal Network Agency) - general surveillance

- • BaFin - financial sector

Enforcement Focus:

- • Workplace co-determination rights

- • Works council involvement in AI deployment

💡 Actionable Insight: Employers can implement technical barriers that prevent them from accessing employees' prompts, which may help avoid certain co-determination triggers

🇮🇹 Italy

Supervisory Authority:

- • Garante per la protezione dei dati personali (Italian Data Protection Authority, the "Garante")

Enforcement Focus:

- • Most aggressive EU enforcer

- • Age verification to protect minors

- • Historical fines (issued under GDPR): OpenAI (€15M initially), Replika/Luka Inc. (€5M)

Unique Guidance:

- • National AI Strategy (2024–2026) emphasizes human capital

- • Heavy scrutiny of consumer-facing AI

🇳🇱 Netherlands

Supervisory Authority:

- • Dutch Data Protection Authority (AP)

Enforcement Focus:

- • High sensitivity to algorithmic profiling

- • Post-Tax Administration scandal awareness (fraud-detection causing financial hardship)

Unique Guidance:

- • "Non-discrimination by design" principles

- • E-learning for government algorithms

4️⃣ Sector-Specific Compliance Deep Dives

#SectorCompliance #FinancialServices #HRCompliance #Healthcare

💰 Financial Services

AI in internal controls or investment services must be overseen by qualified personnel.

Key Requirements:

- • Algorithm accountability frameworks

- • Bias prevention in credit-risk predictive models

- • Harmonization with MiFID II requirements
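
One simple way to screen a credit model for uneven outcomes is to compare approval rates across groups. The Act does not prescribe a specific fairness metric, so the sketch below is an assumption: it computes the ratio of the lowest to the highest group approval rate, and the 0.8 threshold in the usage note is borrowed from the US "four-fifths" rule of thumb rather than from EU law.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    if not rates:
        raise ValueError("at least one decision is required")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group: no disparity to measure
    return min(rates.values()) / highest


if __name__ == "__main__":
    sample = ([("A", True)] * 8 + [("A", False)] * 2
              + [("B", True)] * 6 + [("B", False)] * 4)
    ratio = disparate_impact_ratio(sample)
    print(f"ratio={ratio:.2f}", "REVIEW" if ratio < 0.8 else "OK")
```

A low ratio is a signal to investigate, not proof of unlawful discrimination; the result should feed into the documented risk management process.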

Authorities:

Germany's BaFin, France's AMF

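⚠️ Creditworthiness assessment and risk pricing for life and health insurance are high-risk under Annex III; other financial AI should be assessed case by case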

💼 Human Resources & Employment

AI for recruitment or worker evaluation is generally High-Risk.

Mandatory Requirements:

- ✅ Transparency: Notify candidates of AI use

- ✅ Human oversight: No fully automated rejection decisions

- ✅ Non-discrimination: Align with General Act on Equal Treatment (Germany), French Labour Code

- ✅ Explainability: Candidates must understand decision factors
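
The "no fully automated rejection" requirement can be enforced in the screening pipeline itself. This is a minimal sketch under stated assumptions: `ScreeningResult`, `final_decision`, the 0..1 score, and the 0.7 threshold are all hypothetical, and the point is only that the code path has no branch that rejects a candidate without a human decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScreeningResult:
    candidate_id: str
    model_score: float                       # hypothetical 0..1 ranking score
    reviewer: Optional[str] = None           # set once a human has reviewed
    reviewer_decision: Optional[str] = None  # "advance" or "reject"


def final_decision(result: ScreeningResult, advance_threshold: float = 0.7) -> str:
    """Auto-advance strong candidates; never reject without a human decision."""
    if result.reviewer_decision is not None:
        return result.reviewer_decision      # the human decision always wins
    if result.model_score >= advance_threshold:
        return "advance"
    return "needs_human_review"              # low scores are escalated, not rejected


if __name__ == "__main__":
    print(final_decision(ScreeningResult("c-1", 0.91)))
    print(final_decision(ScreeningResult("c-2", 0.20)))
    print(final_decision(ScreeningResult("c-3", 0.20, "j.doe", "reject")))
```

Recording the reviewer alongside the decision also gives an audit trail that supports the transparency and explainability points above.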

Common Violations:

- • Automated CV screening without human review

- • Bias in candidate ranking algorithms

- • Lack of transparency in AI-driven evaluations

🏥 Healthcare / Medical Devices

AI medical devices face dual compliance: EU AI Act + Medical Devices Regulation (MDR) 2017/745

Additional Requirements:

- • Clinical validation and safety testing

- • Post-market surveillance (stricter than standard PMM)

- • Incident reporting to both AI authorities and medical device regulators

Regulatory Sandboxes:

- • EU encourages testing in controlled environments

- • Note: UK's MHRA "AI Airlock" is separate (UK is third country)

5️⃣ Technical Documentation & Transparency: The Compliance Foundation

#TechnicalDocumentation #AnnexIV #Article50

Compliance for High-Risk systems rests on Annex IV (Technical Documentation) and Article 50 (Transparency).

Core Annex IV Documentation Requirements

Must Include:

- 📋 System Description: Architecture, design specifications, intended purpose

- 📋 Training Data: Specifications, validation datasets, data governance

- 📋 Risk Management: Assessment procedures, mitigation measures

- 📋 Human Oversight: Mechanisms, responsibilities, escalation paths

- 📋 Performance Metrics: Accuracy, robustness, cybersecurity measures

- 📋 Compliance Evidence: Conformity assessment records
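
A documentation gap check against the items listed above can be automated. The sketch below assumes a dossier is kept as a simple mapping from section name to text; the section keys are illustrative shorthand for the bullets above, not the official Annex IV headings.

```python
# Illustrative keys mirroring the bullets above; not the official Annex IV wording.
REQUIRED_SECTIONS = (
    "system_description",
    "training_data",
    "risk_management",
    "human_oversight",
    "performance_metrics",
    "compliance_evidence",
)


def documentation_gaps(dossier: dict[str, str]) -> list[str]:
    """Return the required sections that are missing or empty in the dossier."""
    return [s for s in REQUIRED_SECTIONS if not dossier.get(s, "").strip()]


if __name__ == "__main__":
    dossier = {
        "system_description": "Gradient-boosted credit scoring model, v3.",
        "training_data": "Loan book 2018-2023, see data governance record.",
        "risk_management": "   ",  # present but empty, so it counts as a gap
    }
    print(documentation_gaps(dossier))
```

Treating whitespace-only sections as gaps avoids the common failure mode where a template is created but never filled in.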

⚠️ Critical Deadline:

February 2, 2026: deadline for the Commission to adopt the Post-Market Monitoring plan template (Article 72(3))

Article 50 Transparency Requirements

AI-Generated Content (Deepfakes):

- ✅ Clear labeling as artificially generated

- ✅ Machine-readable indicators

- ✅ Public ability to distinguish from authentic content

AI System Interaction:

- ✅ Users must know they're interacting with AI (chatbots, virtual assistants)

- ✅ Exception: obvious context where AI use is clear
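
A machine-readable indicator can be as simple as a metadata record stored alongside the generated file. The Act does not mandate a particular format, so the following is only a sketch: the field names and the JSON sidecar approach are assumptions, and production systems may prefer an established provenance standard such as C2PA.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_ai_content_label(content: bytes, generator: str) -> str:
    """Return a JSON sidecar declaring the content as AI-generated."""
    label = {
        "ai_generated": True,
        "generator": generator,                         # hypothetical model name
        "sha256": hashlib.sha256(content).hexdigest(),  # ties label to the file
        "labelled_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(label, sort_keys=True)


if __name__ == "__main__":
    print(build_ai_content_label(b"example image bytes", "demo-model"))
```

Including a content hash lets a downstream system confirm that the label belongs to the exact file it accompanies.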

Quality Management System (QMS) Checklist

Essential Components:

- Strategy for regulatory compliance

- Data management procedures (including bias testing)

- Risk management framework

- Human oversight protocols

- Post-market monitoring plan

- Incident reporting procedures

- Technical documentation maintenance

- Regular audits and updates

💡 SME Consideration:

Commission provides simplified documentation forms for micro and small enterprises to reduce administrative burden

6️⃣ Governance: The AI Office and Scientific Panel

#AIOffice #EUGovernance #ScientificPanel

The EU AI Act establishes a complex governance hierarchy with notable power dynamics.

The AI Office

Location: European Commission

Role: Central supervision, GPAI oversight, enforcement coordination

Key Powers:

- • GPAI model evaluation

- • Systemic risk assessment

- • Code of Practice development

- • Cross-border enforcement coordination

Scientific Panel of Independent Experts

Role:

- • Evaluate GPAI models

- • Issue "qualified alerts" regarding systemic risks

- • Provide technical guidance to AI Office

Composition: Independent AI safety and ethics experts

The "Legal Confusion" (Kai Zenner Analysis)

Critical Insight: The AI Office's powers were significantly extended beyond the initial political agreement, effectively diluting the AI Board's influence (representing Member States).

Impact: Member States have lost significant competence in implementation and enforcement as the AI Office replaced the Board in various chapters.

7️⃣ The Cost of Non-Compliance: Penalties & Fines

#AIActPenalties #ComplianceFines #RegulatoryRisk

The financial risks of non-compliance are severe, surpassing even GDPR's maximum of €20M or 4% of global turnover.

Penalty Structure (Article 99 & 101)

| Violation Type | Maximum Fine | Status |
|---|---|---|
| Prohibited Practices (Art. 5) | €35M OR 7% global turnover | ✅ Active since Aug 2025 |
| High-Risk Non-Compliance | €15M OR 3% global turnover | ✅ Active since Aug 2025 |
| Incorrect Information | €7.5M OR 1% global turnover | ✅ Active since Aug 2025 |
| GPAI Violations (Art. 101) | €15M OR 3% global turnover | ⚠️ Applies from Aug 2026 |

⚠️ The HIGHER of the two amounts applies, except for SMEs and start-ups, where the LOWER applies
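
The "higher of the two, lower for SMEs" rule is simple arithmetic. The sketch below works through it; `max_fine_eur` is an illustrative helper that returns only the statutory ceiling, not a prediction of what a regulator would actually impose.

```python
def max_fine_eur(fixed_cap_eur: float, turnover_pct: float,
                 global_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine: higher of the two caps, or the lower for SMEs."""
    pct_cap = global_turnover_eur * turnover_pct / 100
    pick = min if is_sme else max
    return pick(fixed_cap_eur, pct_cap)


if __name__ == "__main__":
    # Prohibited-practice tier: EUR 35M or 7% of global annual turnover.
    print(max_fine_eur(35_000_000, 7, 1_000_000_000))            # large enterprise
    print(max_fine_eur(35_000_000, 7, 10_000_000, is_sme=True))  # small company
```

For an enterprise with €1 billion in turnover, the 7% cap (€70M) exceeds the €35M fixed amount, so €70M is the ceiling. For an SME with €10M in turnover, the lower figure applies, giving €700,000.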

Historical Enforcement Examples (pre-AI Act, issued under GDPR)

🇮🇹 Italy's Aggressive Stance:

- • OpenAI: €15M fine (initially, later negotiated)

- • Luka Inc. (Replika): €5M fine

🇳🇱 Netherlands:

- • Tax Administration: €2.75M fine (algorithmic discrimination scandal)

Beyond Financial Penalties

Market Exclusion:

- • Withdrawal of non-compliant systems from EU market

- • Loss of enterprise contracts requiring compliance certification

Reputational Damage:

- • Public enforcement actions

- • Brand damage and competitive disadvantage

- • Loss of stakeholder trust

Individual Redress:

- • Claims for "serious invasions of privacy"

- • Discriminatory algorithmic profiling lawsuits

- • Class action potential

Cascading Compliance Issues:

- • AI Act violations can trigger parallel GDPR investigations

- • Compound regulatory exposure

Even if deadlines shift, the market consequences are permanent: Enterprises demand compliance in RFPs, insurance providers price based on governance maturity, and investors require proof of responsible AI in due diligence.

8️⃣ Your Action Plan: Moving Toward Trustworthy AI

#ActionPlan #ComplianceRoadmap #TrustworthyAI

The path to August 2026 requires commitment to Data Stewardship Values: fairness, transparency, and accountability.

Immediate Actions (Next 30 Days)

1️⃣ Emergency Audit

- ✅ Review all AI systems against Article 5 prohibited practices

- ✅ Verify no prohibited systems are still operational (February 2025 deadline passed)

- ✅ Classify all systems: Prohibited, High-Risk, Limited Risk, Minimal Risk

2️⃣ Post-Market Monitoring Preparation

- ✅ Review the Commission's PMM plan template (Article 72(3), due February 2, 2026)

- ✅ Establish monitoring infrastructure

- ✅ Define incident reporting procedures
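
Monitoring infrastructure can start small. The following is a minimal sketch of a rolling error-rate monitor that flags when a deployed system should be escalated for incident review; the class name, window size, and thresholds are assumptions for illustration, and what counts as a reportable incident must come from your own procedures and the Act's definitions.

```python
from collections import deque


class ErrorRateMonitor:
    """Tracks recent outcomes and flags when the error rate exceeds a limit."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 min_samples: int = 20):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples

    def record(self, was_error: bool) -> bool:
        """Record one outcome; return True if an incident review is warranted."""
        self.outcomes.append(was_error)
        if len(self.outcomes) < self.min_samples:
            return False  # too little data to judge
        error_rate = sum(self.outcomes) / len(self.outcomes)
        return error_rate > self.max_error_rate


if __name__ == "__main__":
    monitor = ErrorRateMonitor(window=10, max_error_rate=0.2, min_samples=5)
    for outcome in [False, False, False, False, True, True]:
        if monitor.record(outcome):
            print("Error rate above limit: open an incident review")
```

The `min_samples` guard avoids false alarms right after deployment, when a single error would otherwise dominate the rate.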

3️⃣ Governance Structure

- ✅ Appoint AI Compliance Officer

- ✅ Establish cross-functional AI governance committee

- ✅ Define roles and responsibilities (RACI matrix)

Mid-Term Actions (60-180 Days)

4️⃣ Technical Documentation (Annex IV)

- • Build comprehensive documentation for all high-risk systems

- • Implement version control and update procedures

- • Prepare for conformity assessments

5️⃣ Quality Management System

- • Design QMS aligned with ISO 42001 AIMS framework

- • Implement bias testing and validation procedures

- • Establish human oversight mechanisms

6️⃣ Utilize Regulatory Sandboxes

- • Engage with national competent authorities (INESIA in France, Bundesnetzagentur in Germany)

- • Test innovative systems before full deployment

- • Get early feedback on compliance approach

Strategic Actions (By August 2026)

7️⃣ Codes of Practice

- • Join Union-level GPAI code development

- • Influence Article 50 transparency standards

- • Network with industry peers

8️⃣ Third-Party Certification

- • Engage conformity assessment bodies

- • Obtain ISO 42001 certification

- • Build Daiki-certified compliance system

9️⃣ Enterprise Training Program

- • AI literacy for all staff (Article 4 requirement)

- • Specialized training for AI operators

- • Regular compliance updates

🚨 5 Critical Mistakes Enterprises Make

#ComplianceMistakes #AvoidTheseErrors #BestPractices

❌ Treating August 2026 as the start date

✅ Penalties active since August 2025; prohibitions since February 2025

❌ Assuming GDPR compliance covers AI Act

✅ Overlapping but distinct; Annex IV and QMS requirements are new

❌ Ignoring sector-specific requirements

✅ HR, finance, healthcare have additional obligations beyond base Act

❌ Underestimating documentation burden

✅ Annex IV requires extensive technical records; start now

❌ Waiting for perfect clarity

✅ Codes of Practice evolving; act on current guidance, adapt later

🎯 Turn Regulatory Requirements into Competitive Advantage

#CompetitiveAdvantage #TrustworthyAI #EnterpriseLeadership

With 6 months until August 2026 and penalties already active, the window for strategic preparation is closing.

Strategic insight: Even if August 2026 is extended, AI governance is now a permanent market requirement. Your enterprise clients, partners, insurers, and regulators demand it today.

The Trust Dividend - Early compliance creates:

- ✅ Procurement Advantage: Win RFPs requiring AI Act certification

- ✅ Insurance Benefits: Lower premiums for demonstrated governance maturity

- ✅ Partnership Unlock: Enterprise clients require vendor compliance

- ✅ Investor Confidence: Due diligence success signal

- ✅ Brand Leadership: "Trustworthy AI" market positioning

By prioritizing human oversight and technical documentation today, enterprises transform regulatory requirements into competitive advantages of trust.

🆓 Start with a Free Flash Audit

Get your enterprise's EU AI Act compliance readiness score in 10 minutes:

- ✅ System classification and risk assessment

- ✅ Annex IV documentation gap analysis

- ✅ QMS framework evaluation

- ✅ Priority action roadmap with timelines

- ✅ August 2026 readiness score

💼 Or Book Enterprise Consultation

Comprehensive readiness assessment with Daiki Certificate of Readiness for enterprise compliance.

What We Deliver:

- • Complete system audit and risk classification

- • Annex IV technical documentation framework

- • ISO 42001 AIMS implementation

- • Post-Market Monitoring system design

- • 12-month compliance roadmap

- • Executive presentation for board